- If you want search engines to ignore duplicate pages on your website.
- If you do not want your internal search results pages to be indexed.
- If you want search engines not to index specific pages of your site.
- If you do not want some of your files, such as images or PDFs, to be indexed.
- If you want to tell search engines where your sitemap is located.
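As a rough illustration, the cases above could turn into a robots.txt like the one below. The paths `/print/`, `/search`, `/private/` and `/files/price-list.pdf`, and the sitemap URL, are hypothetical placeholders. The sketch uses Python's standard `urllib.robotparser` module to check which URLs a crawler may fetch. That parser only does simple prefix matching, so wildcard patterns such as `*.pdf` are deliberately left out.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt covering the use cases listed above.
ROBOTS_TXT = """\
User-agent: *
# Printer-friendly duplicates of normal pages (hypothetical path)
Disallow: /print/
# Internal search results pages
Disallow: /search
# A private directory (hypothetical path)
Disallow: /private/
# A single file, e.g. a PDF (hypothetical path)
Disallow: /files/price-list.pdf

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://example.com/about.html",
    "https://example.com/print/about.html",
    "https://example.com/search?q=robots",
    "https://example.com/private/notes.html",
    "https://example.com/files/price-list.pdf",
]:
    verdict = "allowed" if parser.can_fetch("*", url) else "blocked"
    print(f"{verdict:8} {url}")
```

Only the first URL is reported as allowed. Note that a prefix rule like `Disallow: /search` also matches paths such as `/searching-tips.html`.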

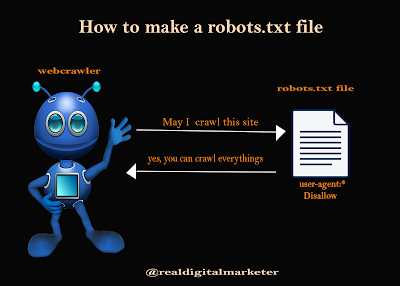

How to make a robots.txt file?

If you have not yet created a robots.txt file for your website or blog, you should make one soon, because it will prove very beneficial later. To create it, follow these steps:

First, create a plain text file and save it as robots.txt. On Windows you can use Notepad; on a Mac you can use TextEdit (switch the document to plain text before saving).

Next, upload it to the root directory of your website. This is the top-level folder of your site (on many hosts it is called "htdocs" or "public_html"), so the file is served directly after your domain name, at example.com/robots.txt.
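If you would rather script these steps than use Notepad or TextEdit, the sketch below writes the file with Python. It assumes a local folder named `site_root` (a hypothetical name) that mirrors your site's root directory; you would still need to upload the resulting file to the real root folder on your server. The rules written are only an example.

```python
from pathlib import Path

# "site_root" is a hypothetical local folder standing in for your
# site's root directory (e.g. htdocs or public_html on the server).
site_root = Path("site_root")
site_root.mkdir(exist_ok=True)

# Example rules only; replace them with your own.
rules = "User-agent: *\nDisallow: /private/\n"

# Save as plain text under the exact lowercase name robots.txt.
robots_path = site_root / "robots.txt"
robots_path.write_text(rules, encoding="utf-8")

print(robots_path.read_text(encoding="utf-8"))
```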

If you use subdomains, you need to create a separate robots.txt file for each subdomain.
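A crawler finds the file by dropping the path from a page's URL and requesting /robots.txt at the root of that host. The small helper below (its name, `robots_url_for`, is made up for illustration) sketches that lookup with Python's `urllib.parse` and shows why every subdomain needs its own file: each host gets a different robots.txt URL.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    Crawlers look for the file only at the root of the host
    (scheme + host + port), so each subdomain has its own file.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


for url in [
    "https://example.com/blog/2020/robots-guide.html",
    "https://shop.example.com/cart?id=7",
    "https://blog.example.com/",
]:
    print(url, "->", robots_url_for(url))
```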

Where does the robots.txt file reside on a website?

If you are a WordPress user, the file resides in your site's root folder, just as it does on any other website. If it is not found in that location, search engine bots will crawl your entire website, because they only look for robots.txt at the root and do not search the rest of your site for it.

Not sure whether your site already has a robots.txt file? Just type your domain followed by /robots.txt into your browser's address bar, for example example.com/robots.txt. If the file exists, a plain text page will open, as you can see in the screenshot.

This is the robots.txt file of realdigitalmarketer. If you do not see a text page like this, you need to create a robots.txt file for your site.

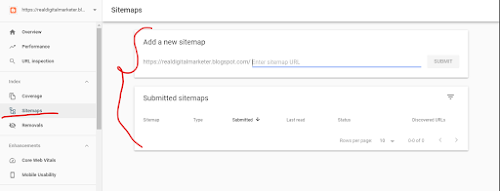

You can also check it in Google Search Console.

The basic format of a robots.txt file is very simple: a User-agent line naming a crawler, followed by a Disallow line giving a URL path that crawler should not crawl. These two lines together are considered a complete robots.txt file. However, a robots.txt file can contain multiple user-agent groups and directives (Disallow, Allow, Crawl-delay, etc.).
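To see how several groups and directives fit together, the sketch below parses a slightly larger, hypothetical file with Python's standard `urllib.robotparser` module: one group for Googlebot, and one for all other crawlers with Allow and Crawl-delay lines. A crawler follows only the group that matches it, which is why Googlebot may fetch /private/ here. This parser applies the first matching rule within a group, while Google uses the most specific (longest) match; the ordering below gives the same answer either way. Support for Crawl-delay varies between search engines (Google, for example, ignores it). All paths and the delay value are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Basic format:
#   User-agent: [crawler name]
#   Disallow: [URL path that crawler should not crawl]
# Hypothetical file with two groups and extra directives.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://example.com/drafts/post.html"))      # False
print(parser.can_fetch("Googlebot", "https://example.com/private/a.html"))        # True
print(parser.can_fetch("Bingbot", "https://example.com/private/a.html"))          # False
print(parser.can_fetch("Bingbot", "https://example.com/private/press-kit.html"))  # True
print(parser.crawl_delay("Bingbot"))                                              # 10
```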

Preventing all Web Crawlers from indexing websites

Adding the lines User-agent: * and Disallow: / to the robots.txt file prevents all web crawlers and bots from crawling the website.
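In this two-line file, `User-agent: *` addresses every crawler and `Disallow: /` covers every path on the site. A quick check with Python's standard `urllib.robotparser` (the crawler names and URLs are arbitrary examples) confirms that nothing may be fetched. Keep in mind that this blocks crawling rather than guaranteeing removal from results: a blocked URL can still be listed, without its content, if other pages link to it.

```python
from urllib.robotparser import RobotFileParser

# "*" matches every crawler; "Disallow: /" matches every path on the site.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(BLOCK_ALL.splitlines())

for url in ["https://example.com/", "https://example.com/blog/post.html"]:
    print(url, parser.can_fetch("Googlebot", url))  # False for both
```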

All Web Crawlers Allowed to Index All Content

The lines User-agent: * followed by an empty Disallow: line in the robots.txt file allow all search engine bots to crawl every page of your site.
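The allow-all version simply leaves the Disallow value empty. The sketch below checks it with Python's standard `urllib.robotparser` and, for comparison, an empty file, which places no restrictions on crawlers either. That mirrors a site with no robots.txt at all. The URL is an arbitrary example.

```python
from urllib.robotparser import RobotFileParser

# An empty Disallow value means "nothing is disallowed".
ALLOW_ALL = """\
User-agent: *
Disallow:
"""

allow_all = RobotFileParser()
allow_all.parse(ALLOW_ALL.splitlines())

# For comparison: an empty robots.txt places no restrictions either.
empty = RobotFileParser()
empty.parse([])

url = "https://example.com/private/page.html"
print(allow_all.can_fetch("Googlebot", url))  # True
print(empty.can_fetch("Googlebot", url))      # True
```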

Add a Sitemap to a Robots.txt file

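You can add your sitemap anywhere in your robots.txt file, using a line with Sitemap: followed by the full URL of the sitemap (for example, Sitemap: https://example.com/sitemap.xml).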
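A Sitemap line takes the full (absolute) URL of the sitemap and does not belong to any User-agent group, so its position in the file does not matter, and you can list more than one. The sketch below parses such a file with Python's standard `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later); both sitemap URLs and the `/search` rule are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap line is independent of any User-agent group,
# so it can sit at the top, at the bottom, or anywhere in between.
ROBOTS_TXT = """\
Sitemap: https://example.com/sitemap.xml

User-agent: *
Disallow: /search

Sitemap: https://example.com/post-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Lists both sitemap URLs, in the order they appear in the file.
print(parser.site_maps())
```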

What if we don't use a robots.txt file?

If we do not use a robots.txt file, search engines have no restrictions on where to crawl, and they can index everything they find on your website. That is fine for many websites, but as a matter of good practice we should use a robots.txt file, because it makes it easier for search engines to crawl your pages, and they do not need to go over all of your pages again and again.
